AI Agent Data Analysis - Proactive AI Agents

AI has already proven its value in helping people draft emails, summarize documents, and answer knowledge-based questions. But when it comes to real business tasks like analyzing data, running reports, or producing reliable insights, simple one-shot prompting quickly shows its limits. Static prompts can’t adapt when the schema changes, an API call fails, or a question requires multiple steps of reasoning.

This is where AI data agents come in. They are a category of AI agents focused on data-centric tasks: they break problems into steps, invoke tools such as databases or code interpreters, recover from errors, and adapt their strategies until the task is complete. In practice, this means moving from a single exchange of text to an agentic workflow that resembles how a human analyst works: plan, execute, check the results, and refine.

One of the clearest use cases for AI data agents is data analysis, particularly text-to-SQL workflows. Translating a natural-language question into SQL requires more than language fluency; it demands schema discovery, query generation, error handling, and validation. This article explains what AI data agents are, what makes some of them “proactive,” why that matters for data analysis, and how new frameworks are making these workflows reliable at enterprise scale.

Summary of key concepts: AI Agents for Data Analysis

AI Data Agents

AI data agents are a specialized type of AI agent designed to analyze and interpret data. They represent a shift from prompt-and-response models to goal-driven software layers: instead of answering in one shot, they plan, act, learn, and adapt in pursuit of user goals. This makes them especially powerful in domains such as data analysis, where success requires multiple steps, tool use, and validation.

Tool orchestration is built in. Rather than forcing engineers to pre-wire every step, modern data agents decide at runtime whether to call a database reader, execute Python, query a BI API, or run a vector search. They validate outputs, apply fixes when executions fail, and stitch the results back into a coherent, analyst-grade response. In short, data agents move work from “tell me the answer” to “do the work and prove it,” which is what lets them handle real business problems that one-shot prompting cannot.
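To make runtime tool selection concrete, here is a minimal sketch of a tool registry and a plan executor. It assumes the planner (in practice an LLM) emits a list of tool-call steps; a hard-coded plan stands in for the model, and the tool names `run_sql` and `run_python` are hypothetical. The validation step is deliberately minimal.

```python
import sqlite3
from typing import Any, Callable

# Hypothetical tool registry: tool name -> Python callable. A real agent would
# expose these to the LLM through its function-calling interface.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function as a tool the planner may call."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("EU", 120.0), ("US", 200.0), ("EU", 80.0)])


@tool("run_sql")
def run_sql(query: str) -> list[tuple]:
    return conn.execute(query).fetchall()


@tool("run_python")
def run_python(rows: list[tuple]) -> dict[str, float]:
    # Stand-in for a code-interpreter step: reshape SQL rows into a mapping.
    return {region: total for region, total in rows}


def execute_plan(plan: list[dict[str, Any]]) -> Any:
    """Run each step in order, optionally feeding the previous result forward."""
    result: Any = None
    for step in plan:
        fn = TOOLS[step["tool"]]               # tool chosen by name at runtime
        args = dict(step.get("args", {}))
        if "use_previous" in step:             # wire the last output into an argument
            args[step["use_previous"]] = result
        result = fn(**args)
        if not result:                         # minimal output validation
            raise RuntimeError(f"step {step['tool']!r} returned an empty result")
    return result


# Hard-coded here; in a real agent the LLM would emit this plan at runtime.
plan = [
    {"tool": "run_sql",
     "args": {"query": "SELECT region, SUM(amount) FROM sales "
                       "GROUP BY region ORDER BY region"}},
    {"tool": "run_python", "use_previous": "rows"},
]
print(execute_plan(plan))  # {'EU': 200.0, 'US': 200.0}
```

Keeping tools behind a name-based registry is what lets the plan be produced at runtime rather than wired in code ahead of time.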

Real-world examples include the following:

- LinkedIn SQL Bot enables non-technical staff to query a million-table warehouse via natural language, dynamically discovering schemas and fixing errors along the way.

- WisdomAI is a comprehensive agentic data intelligence platform that integrates into your existing infrastructure. It connects to all your data, enabling real-time insight and governance beyond traditional data warehouses.

- Microsoft 365 Copilot Analyst integrates into familiar productivity tools, allowing employees to analyze data and generate insights without leaving Excel or Teams.


AI Agents for Data Analysis

Traditional BI workflows rely on manual dashboards: drag-and-drop fields, predefined queries, and static reports. AI agents represent the next leap, from dashboards to delegates. Instead of building a chart manually, you ask a business question (like “why did Q2 churn spike?”) and the agent plans the steps, selects the right data, writes and executes code, tests hypotheses, and presents a narrative explanation, often in minutes.

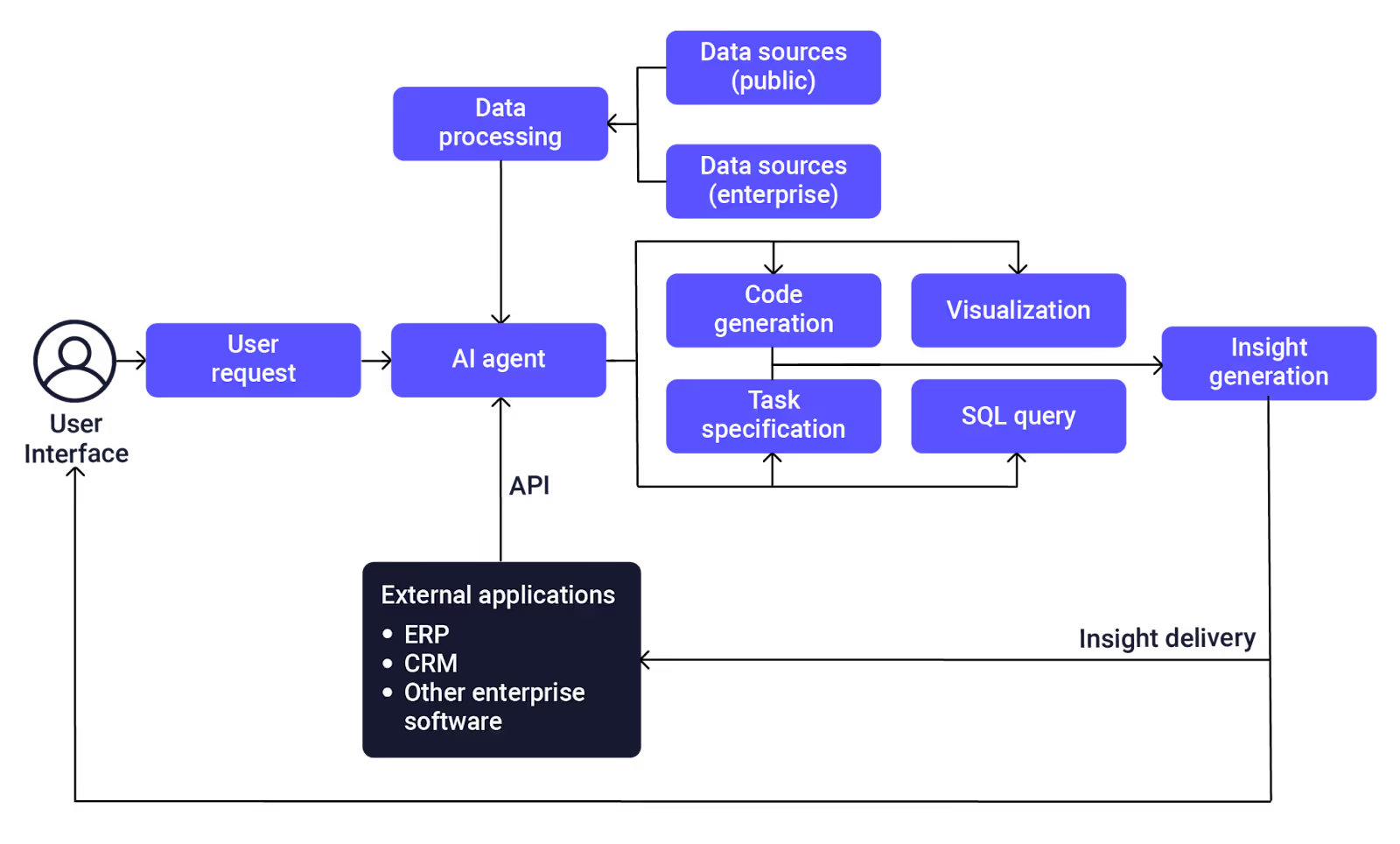

A data-analysis agent typically combines four functional layers.

Text-to-SQL agents

One of the most mature and widely deployed applications of agentic workflows is text-to-SQL. Here, the agent converts natural-language questions into executable database queries, validates the results, and refines the query when errors occur. To handle enterprise-scale schemas, dynamic schema tools such as /list_tables and /describe_table let the agent fetch table and column metadata on demand. This keeps prompts compact and efficient, even when the warehouse contains hundreds of tables.
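As a rough illustration, the two schema tools can be sketched as plain Python functions over SQLite's catalog. The names mirror /list_tables and /describe_table from the text, but the implementation (SQLite's `sqlite_master` table and `PRAGMA table_info`) is our own assumption; a warehouse would use its `information_schema` or catalog API instead.

```python
import sqlite3


def list_tables(conn: sqlite3.Connection) -> list[str]:
    """Return table names only, so the agent's prompt stays small."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return [name for (name,) in rows]


def describe_table(conn: sqlite3.Connection, table: str) -> list[dict]:
    """Fetch column metadata for a single table, only when the agent asks."""
    if table not in list_tables(conn):  # reject identifiers that are not real tables
        raise ValueError(f"unknown table: {table}")
    cols = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    # Each PRAGMA row is (cid, name, type, notnull, dflt_value, pk).
    return [{"name": c[1], "type": c[2], "primary_key": bool(c[5])} for c in cols]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT)")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")

print(list_tables(conn))  # ['customers', 'orders']
print(describe_table(conn, "orders"))
```

The agent first sees only table names and pulls column details for the one or two tables it actually needs, which is what keeps the prompt compact.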

When queries fail, agents no longer simply give up. Instead, they apply an error taxonomy to classify the problem (such as a missing column, a join mismatch, or a syntax error) and then select the appropriate repair strategy. This adaptive loop has been proven at scale at LinkedIn, where SQL Bot supports hundreds of business users daily. Similarly, AWS shows how enterprises can build scalable text-to-SQL systems using Amazon Bedrock Agents.
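A simplified error taxonomy might look like the sketch below. The categories come from the text; matching on SQLite error-message substrings and the repair hints are illustrative assumptions, since every database engine words its errors differently. Treating "ambiguous column name" as a join problem is only one possible proxy.

```python
import sqlite3

# Hypothetical taxonomy entries: (category, message substring, repair strategy).
ERROR_TAXONOMY = [
    ("missing_column", "no such column", "re-fetch the table schema and remap the column"),
    ("missing_table", "no such table", "call list_tables and pick the closest match"),
    ("join_mismatch", "ambiguous column name", "qualify columns with table aliases"),
    ("syntax_error", "syntax error", "regenerate the query from the plan"),
]


def classify_error(exc: Exception) -> tuple[str, str]:
    """Map a database error to (category, repair strategy)."""
    message = str(exc).lower()
    for category, needle, strategy in ERROR_TAXONOMY:
        if needle in message:
            return category, strategy
    return "unknown", "escalate to a human reviewer"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE a (id INTEGER, x TEXT)")
conn.execute("CREATE TABLE b (id INTEGER, y TEXT)")

for sql in ["SELECT revenue FROM a",
            "SELECT id FROM a JOIN b ON a.id = b.id",
            "SELEC * FROM a"]:
    try:
        conn.execute(sql)
    except sqlite3.OperationalError as exc:
        print(classify_error(exc)[0])
# prints missing_column, join_mismatch, syntax_error (one per line)
```

In a full agent, the returned strategy would be passed back to the planner together with the error text, so the next attempt is targeted rather than a blind retry.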

Notebook/Python agents

Notebook agents combine LLM reasoning with live code execution, iterating until the code runs without errors and the plots render. The planner decides what data to fetch and which analysis to run, the executor runs Python cells in a sandboxed runtime, and the memory/state layer stores intermediate dataframes for reuse or inspection. A “live code view” lets analysts inspect, modify, or rerun the generated snippets, which matters for both trust and teachability.
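The executor-plus-state pattern can be sketched as follows. Each generated cell runs against a shared namespace so later cells can reuse intermediate results, and a failed cell returns its traceback so the generator can revise it. The `generate_cell` callable is a hypothetical stand-in for the LLM. Note that Python's `exec` is not a sandbox; a production agent would run cells in an isolated process or container.

```python
import traceback
from typing import Callable, Optional


class NotebookExecutor:
    """Runs code cells against shared state, like a minimal notebook kernel."""

    def __init__(self) -> None:
        self.state: dict = {}         # intermediate variables persist here
        self.history: list[str] = []  # "live code view": every cell that ran

    def run_cell(self, code: str) -> Optional[str]:
        """Execute a cell; return None on success or the error text on failure."""
        self.history.append(code)
        try:
            exec(code, self.state)    # NOT a sandbox; illustration only
            return None
        except Exception:
            return traceback.format_exc(limit=1)


def run_until_success(executor: NotebookExecutor,
                      generate_cell: Callable[[Optional[str]], str],
                      max_attempts: int = 3) -> bool:
    """Ask the (hypothetical) generator for code, feeding back the last error."""
    error: Optional[str] = None
    for _ in range(max_attempts):
        error = executor.run_cell(generate_cell(error))
        if error is None:
            return True
    return False


# Stand-in for an LLM: the first attempt has a typo, the second fixes it.
attempts = iter(["totals = sum(valuess)", "totals = sum(values)"])
ex = NotebookExecutor()
ex.run_cell("values = [3, 4, 5]")
ok = run_until_success(ex, lambda err: next(attempts))
print(ok, ex.state["totals"])  # True 12
```

Keeping `history` alongside `state` is what makes the "live code view" possible: an analyst can see every cell the agent tried, including the failed one.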

BI-dashboard agents

Beyond SQL queries and notebooks, agents are beginning to reshape business intelligence dashboards. The entire workflow, from pulling the right data to generating visualizations and setting refresh schedules, can be automated. To ensure consistency, agents must apply business-metric templates, such as standardized definitions of revenue, churn, or NPS, so that dashboards align with enterprise standards rather than with ad hoc phrasing. Early demonstrations of Vertex AI Agent Engine show a complete dashboard being generated in under sixty seconds. This points toward a future where business users no longer request dashboards from analysts but generate them on demand, with agents handling both the technical and the semantic heavy lifting.
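One way to encode business-metric templates is a small registry of governed definitions that the agent renders into SQL instead of improvising formulas. The metric names, formulas, and table names below are hypothetical examples, not standard definitions.

```python
import sqlite3
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str   # governed SQL expression, owned by the data team
    source: str       # table the metric is defined over
    description: str


# Hypothetical governed definitions; a real catalog would live in a semantic layer.
METRICS = {
    "revenue": Metric("revenue", "SUM(amount)", "invoices", "Sum of invoiced amounts"),
    "churn_rate": Metric("churn_rate", "AVG(CASE WHEN churned THEN 1.0 ELSE 0.0 END)",
                         "accounts", "Share of accounts marked churned"),
}


def metric_sql(metric_name: str, group_by: str) -> str:
    """Render a governed metric; an undefined metric raises KeyError, never a guess."""
    m = METRICS[metric_name]
    if not group_by.isidentifier():  # only plain column names may be interpolated
        raise ValueError(f"invalid grouping column: {group_by!r}")
    return (f"SELECT {group_by}, {m.expression} AS {m.name} "
            f"FROM {m.source} GROUP BY {group_by} ORDER BY {group_by}")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (segment TEXT, churned INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)",
                 [("SMB", 1), ("SMB", 0), ("Enterprise", 0), ("Enterprise", 0)])
print(conn.execute(metric_sql("churn_rate", "segment")).fetchall())
# [('Enterprise', 0.0), ('SMB', 0.5)]
```

Because the formula comes from the registry, two differently worded requests for "churn" produce the same calculation.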

AI Agent Building Blocks for Data Analysis

The table below shows the AI agent building blocks for data analysis, with a sample real-world application for a manufacturing firm using the WisdomAI platform.

Large-scale datasets (millions to billions of rows) also introduce constraints such as latency, cost, and memory that change how agentic workflows operate. In production, agents avoid pulling whole tables into memory and instead orchestrate computation where the data lives: they discover partitions and table statistics, issue push-down filters and pre-aggregations, and offload heavy transforms to the data warehouse. For example, when a manager asks for churn signals across millions of tickets, a big-data orchestration agent checks partitioning and statistics, runs a partition-pruned aggregation, and returns a fast sampled preview in chat. If requested, it then runs a full-scale analysis in the background and notifies the manager when the complete results are ready.
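The push-down pattern can be sketched with SQLite standing in for a warehouse: the agent filters on the partition column and aggregates inside the database rather than loading raw rows, and a query over a recent slice serves as the quick preview. The `tickets` table, its `day` column (indexed here as a stand-in for real partitioning), and the preview/full split are illustrative assumptions. The preview is a recent-window slice rather than a random sample, because real warehouses expose sampling through their own engine-specific syntax.

```python
import functools
import sqlite3
from datetime import date, timedelta


def churn_signal_sql(start: str, end: str) -> tuple[str, tuple[str, str]]:
    """Aggregate inside the database instead of pulling raw rows into Python."""
    sql = ("SELECT customer_id, COUNT(*) AS ticket_count, "
           "SUM(CASE WHEN severity = 'high' THEN 1 ELSE 0 END) AS high_sev "
           "FROM tickets "
           "WHERE day BETWEEN ? AND ? "  # filter on the partition column first
           "GROUP BY customer_id "
           "ORDER BY high_sev DESC, ticket_count DESC, customer_id")
    return sql, (start, end)


def preview_then_full(conn: sqlite3.Connection, end: date,
                      full_days: int = 90, preview_days: int = 7):
    """Return a cheap preview over recent partitions plus a deferred full job."""
    def run(days: int, suffix: str = "") -> list[tuple]:
        sql, args = churn_signal_sql((end - timedelta(days=days)).isoformat(),
                                     end.isoformat())
        return conn.execute(sql + suffix, args).fetchall()

    preview = run(preview_days, " LIMIT 5")       # fast answer for the chat
    full_job = functools.partial(run, full_days)  # hand off to a background scheduler
    return preview, full_job


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tickets (customer_id INTEGER, day TEXT, severity TEXT)")
conn.execute("CREATE INDEX idx_tickets_day ON tickets (day)")  # stands in for partitions
conn.executemany("INSERT INTO tickets VALUES (?, ?, ?)", [
    (1, "2024-06-28", "high"), (1, "2024-06-29", "high"),
    (2, "2024-06-30", "low"), (2, "2024-04-15", "high"),
    (3, "2024-05-01", "low"),
])

preview, full_job = preview_then_full(conn, date(2024, 6, 30))
print(preview)     # [(1, 2, 2), (2, 1, 0)]
print(full_job())  # [(1, 2, 2), (2, 2, 1), (3, 1, 0)]
```

Only the aggregated rows ever leave the database, which is the point of pushing the computation down.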

Examples from real-world platforms

Several platforms today demonstrate agentic workflows in practice, combining natural language understanding, tool orchestration, and adaptive reasoning. OpenAI’s ChatGPT agent enables users to execute complex data analysis, run code, and make API calls all within a single, continuous workflow.

Microsoft 365 Copilot’s Analyst agent acts as a “virtual data scientist” embedded directly in the Microsoft 365 suite. Built on OpenAI’s o3-mini reasoning model, it lets users explore complex datasets, execute Python scripts, and create detailed reports, all without requiring advanced data skills.

Modern enterprise AI platforms, such as WisdomAI, are agentic by design. For example, a manager might ask: “Which enterprise customers are at risk of churn based on support tickets and NPS scores from last quarter?” The platform’s agent handles the request end-to-end:

- The agent parses the manager’s question and identifies the required data.

- It discovers the current CRM and support ticket schemas and generates SQL to join customer, support, and NPS data.

- It validates the queries, interprets the results, and visualizes at-risk customers while recommending potential actions.

- It captures feedback from the manager and uses it to improve future recommendations and insights.
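For illustration, the SQL generated in the second step might resemble the query below. The schema (`customers`, `support_tickets`, `nps_responses`), the date range, the thresholds, and the risk rule are hypothetical assumptions made for this sketch; they are not WisdomAI's actual output. Aggregating each source in its own CTE before joining avoids the row fan-out that a naive three-way join would cause.

```python
import sqlite3

AT_RISK_SQL = """
WITH tickets AS (          -- aggregate each source first to avoid join fan-out
    SELECT customer_id, COUNT(*) AS ticket_count
    FROM support_tickets
    WHERE opened_on BETWEEN :start AND :end
    GROUP BY customer_id
), nps AS (
    SELECT customer_id, AVG(score) AS avg_nps
    FROM nps_responses
    WHERE responded_on BETWEEN :start AND :end
    GROUP BY customer_id
)
SELECT c.name,
       COALESCE(t.ticket_count, 0) AS recent_tickets,
       n.avg_nps
FROM customers AS c
LEFT JOIN tickets AS t ON t.customer_id = c.id
LEFT JOIN nps AS n ON n.customer_id = c.id
WHERE c.segment = 'enterprise'
  AND (COALESCE(t.ticket_count, 0) >= :max_tickets OR n.avg_nps <= :nps_floor)
ORDER BY recent_tickets DESC, c.name
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, segment TEXT);
CREATE TABLE support_tickets (customer_id INTEGER, opened_on TEXT);
CREATE TABLE nps_responses (customer_id INTEGER, responded_on TEXT, score INTEGER);
INSERT INTO customers VALUES (1, 'Acme', 'enterprise'), (2, 'Globex', 'enterprise'),
                             (3, 'Initech', 'smb');
INSERT INTO support_tickets VALUES (1, '2024-04-10'), (1, '2024-05-02'), (1, '2024-06-20'),
                                   (3, '2024-05-05'), (3, '2024-05-06'), (3, '2024-05-07');
INSERT INTO nps_responses VALUES (1, '2024-05-01', 8), (2, '2024-06-01', 4),
                                 (2, '2024-06-15', 6);
""")

params = {"start": "2024-04-01", "end": "2024-06-30", "max_tickets": 3, "nps_floor": 6}
rows = conn.execute(AT_RISK_SQL, params).fetchall()
print(rows)  # [('Acme', 3, 8.0), ('Globex', 0, 5.0)]
```

Acme is flagged for ticket volume and Globex for low NPS, while the SMB customer is excluded by the segment filter.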

AI data agents also simplify ad hoc exploratory data analysis, which is inefficient in traditional BI tools. Tableau and Power BI are good at visualizing data in predefined dashboards, but when questions are not known in advance, every new question means building a new view by hand, and discoveries that no dashboard was designed to surface are easily missed. AI data agents provide a conversational, flexible way to analyze data instead.

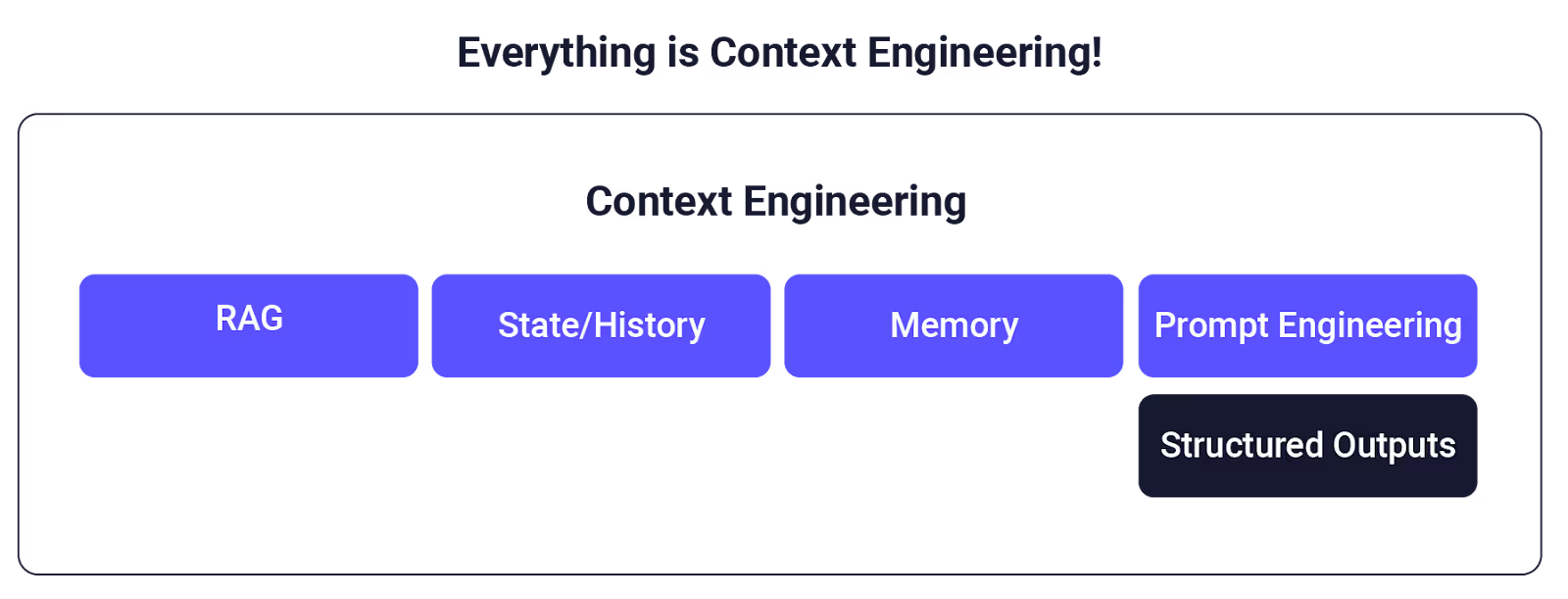

Context Engineering and Domain Knowledge

An agent's performance is inherently tied to the quality and structure of the context it operates in. In data analysis, this means access to high-quality metadata, well-defined table descriptions, and relevant business rules. CorralData's study demonstrates that integrating just six types of metadata can raise the correctness of text-to-SQL agents to nearly 85%. Treat prompts, tools, retrieval, and business rules as one cohesive information environment so that agents see the right facts at the right time.

The 12-Factor Agents methodology further emphasizes the importance of managing the "context window" effectively. Factor 3, titled “own your context window,” advocates for treating prompts, tools, and retrieval sources as a cohesive, managed surface. In a nutshell, “great inputs = great outputs.”
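Here is a minimal sketch of "owning the context window" for a text-to-SQL agent: rather than dumping the whole catalog into the prompt, the agent selects only the tables relevant to the question and attaches their descriptions and applicable business rules. The keyword-overlap relevance score, the metadata fields, the stopword list, and the rule text are hypothetical simplifications; production systems typically use embedding retrieval over a governed metadata catalog.

```python
import re
from dataclasses import dataclass, field

STOPWORDS = {"the", "a", "an", "of", "to", "and", "or", "how", "many", "did",
             "what", "which", "last", "per", "in", "on", "is", "was"}


@dataclass
class TableDoc:
    name: str
    description: str
    columns: dict[str, str]                          # column -> description
    rules: list[str] = field(default_factory=list)   # business rules for this table


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def relevance(question: str, doc: TableDoc) -> int:
    """Crude keyword overlap; a stand-in for embedding-based retrieval."""
    doc_text = " ".join([doc.name, doc.description, *doc.columns, *doc.columns.values()])
    return len(tokens(question) & tokens(doc_text))


def build_context(question: str, catalog: list[TableDoc], max_tables: int = 2) -> str:
    """Assemble a compact context block from the most relevant tables only."""
    scored = [(relevance(question, d), d) for d in catalog]
    scored = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    lines: list[str] = []
    for _, doc in scored[:max_tables]:
        lines.append(f"TABLE {doc.name}: {doc.description}")
        lines.extend(f"  - {col}: {desc}" for col, desc in doc.columns.items())
        lines.extend(f"  RULE: {rule}" for rule in doc.rules)
    return "\n".join(lines)


CATALOG = [
    TableDoc("support_tickets", "One row per customer support ticket",
             {"customer_id": "FK to customers.id", "opened_on": "ISO date the ticket was opened"},
             ["Exclude tickets with status 'spam'"]),
    TableDoc("invoices", "Billed revenue per invoice", {"amount": "Invoice total in USD"},
             ["Revenue excludes refunds"]),
    TableDoc("customers", "Customer accounts and segment",
             {"id": "Primary key", "segment": "enterprise or smb"}),
]

question = "How many support tickets did enterprise customers open last quarter?"
print(build_context(question, CATALOG))
```

The invoices table never reaches the prompt, while the ticket table arrives together with its spam-exclusion rule, which is the "right facts at the right time" idea in miniature.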

Security and Governance Considerations

AI agents expand what can be automated, but they also amplify risk: they can traverse multiple systems, execute code, and handle sensitive data, often without direct human oversight. That power demands a security posture that assumes any tool call or data fetch could be exploited if controls are weak. The foundational best practice is least-privilege access: grant each agent only the specific OAuth scopes or IAM roles it needs, and only for as long as it needs them. Pair this with strong session isolation, sandboxing every execution in its own environment to prevent data leakage between users or tasks.
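The least-privilege and session-isolation ideas can be sketched as a per-session grant that lists the scopes an agent may use and expires after a fixed time. The scope names, the `ScopedSession` class, and the 15-minute default are hypothetical; in practice these checks would be enforced by the identity provider (OAuth scopes, IAM roles) rather than by the agent's own code.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ScopedSession:
    """Hypothetical per-task session with its own scopes, expiry, and state."""
    scopes: frozenset[str]
    ttl_seconds: float = 900.0  # grant is valid for 15 minutes
    session_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    created_at: float = field(default_factory=time.monotonic)
    scratch: dict = field(default_factory=dict)  # isolated, never shared

    def authorize(self, scope: str) -> None:
        if time.monotonic() - self.created_at > self.ttl_seconds:
            raise PermissionError(f"session {self.session_id} has expired")
        if scope not in self.scopes:
            raise PermissionError(f"scope {scope!r} was not granted")


def call_tool(session: ScopedSession, scope: str,
              fn: Callable[..., Any], *args: Any) -> Any:
    session.authorize(scope)  # checked on every call, not once per task
    return fn(*args)


analyst = ScopedSession(scopes=frozenset({"warehouse:read"}))
print(call_tool(analyst, "warehouse:read", lambda q: f"ran {q}", "SELECT 1"))
try:
    call_tool(analyst, "warehouse:write", lambda q: q, "DROP TABLE sales")
except PermissionError as err:
    print("blocked:", err)
```

Checking authorization on every call, rather than once at the start of a task, means an expired or narrowed grant takes effect immediately.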

Observability is equally critical. Step-level traces enriched with metadata such as data classification, tenant ID, and purpose let compliance teams audit what was accessed, when, and why. These traces also serve as an early-warning system for unusual behavior. Finally, emerging standards such as the Model Context Protocol (MCP) and the Agent2Agent (A2A) protocol include ways to encode security metadata directly in the context an agent receives, supporting safe interoperability across domains.
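A step-level trace record carrying the metadata mentioned above might look like this sketch. The field names, the JSON-lines format, and the classification-based alert are assumptions for illustration; a production deployment would more likely emit spans to an observability backend, for example via OpenTelemetry.

```python
import json
import time
from dataclasses import asdict, dataclass


@dataclass
class TraceStep:
    trace_id: str
    step: int
    tool: str
    tenant_id: str
    data_classification: str   # e.g. "public", "internal", "pii"
    purpose: str               # why the agent accessed the data
    resource: str
    started_at: float
    duration_ms: float
    ok: bool


def record_step(log: list[str], **fields) -> TraceStep:
    """Append one JSON line per tool call so auditors can replay the run."""
    entry = TraceStep(**fields)
    log.append(json.dumps(asdict(entry), sort_keys=True))
    return entry


def flag_unusual(log: list[str], allowed: set[str]) -> list[dict]:
    """Early warning: steps that touched data classes outside the allowed set."""
    steps = [json.loads(line) for line in log]
    return [s for s in steps if s["data_classification"] not in allowed]


audit_log: list[str] = []
now = time.time()
record_step(audit_log, trace_id="t-1", step=1, tool="run_sql", tenant_id="acme",
            data_classification="internal", purpose="churn analysis",
            resource="support_tickets", started_at=now, duration_ms=42.0, ok=True)
record_step(audit_log, trace_id="t-1", step=2, tool="run_sql", tenant_id="acme",
            data_classification="pii", purpose="churn analysis",
            resource="customer_emails", started_at=now, duration_ms=8.5, ok=True)
print([s["resource"] for s in flag_unusual(audit_log, {"public", "internal"})])
# ['customer_emails']
```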

Proactive AI Data Agents

Today’s AI data agents already automate complex data tasks. Proactive agents go a step further: they anticipate changes and needs before problems arise, take initiative without waiting for instructions, and optimize outcomes by acting in advance. Core capabilities that make AI agents proactive include the following:

- Anticipatory discovery and adaptation: Proactive agents detect evolving data sources, schema changes, or new APIs before they break workflows, and update metadata and templates ahead of time to stay aligned with the changing environment.

- Preemptive error detection and mitigation: Instead of only reacting when failures happen, proactive agents monitor indicators (patterns, metrics) that a failure might occur, take corrective action in advance, and use feedback to avoid similar errors in future tasks.

- Predictive planning and foresight: Proactive agents use predictive models and historical data to forecast possible decision paths, optimize for desirable outcomes, and prepare fallback plans before encountering obstacles.

- Agent coordination: Agents often rely on a team-based approach, sometimes called actor/critic/expert patterns. In this setup, different agents play distinct roles: one might generate a query, another assess its accuracy, and a third refine or validate the outcome.

- Learning over time: Through reinforcement from success/failure cycles and fine-tuning on user interaction logs, proactive agents improve with use. Over time, this creates a virtuous loop in which the system gets better at anticipating errors, adapting plans, and aligning outputs with user expectations.

- Autonomous initiation: Rather than waiting for instructions or user prompts, proactive agents can suggest convenient next steps, trigger useful tasks, send reminders, or even perform safe operations themselves when predefined criteria are met. Data analysis platforms like WisdomAI are embedding proactive AI agents to create virtual data analyst colleagues.
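Anticipatory discovery can start with something as simple as snapshotting schemas on a schedule and diffing them, so that a dropped or renamed column is flagged before a saved query breaks. The sketch below uses SQLite's `PRAGMA table_info` as a stand-in for a warehouse catalog; the snapshot format and the naive substring check for affected templates are hypothetical simplifications.

```python
import sqlite3

Schema = dict[str, dict[str, str]]  # {table: {column: declared type}}


def snapshot(conn: sqlite3.Connection) -> Schema:
    """Capture the current schema so it can be compared on the next run."""
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'")]
    return {t: {col[1]: col[2] for col in conn.execute(f'PRAGMA table_info("{t}")')}
            for t in tables}


def diff_schemas(old: Schema, new: Schema) -> list[str]:
    """List human-readable changes between two snapshots."""
    changes = [f"dropped table {t}" for t in old.keys() - new.keys()]
    for t in old.keys() & new.keys():
        changes += [f"dropped column {t}.{c}" for c in old[t].keys() - new[t].keys()]
        changes += [f"added column {t}.{c}" for c in new[t].keys() - old[t].keys()]
        changes += [f"retyped column {t}.{c}" for c in old[t].keys() & new[t].keys()
                    if old[t][c] != new[t][c]]
    return sorted(changes)


def templates_at_risk(changes: list[str], templates: dict[str, str]) -> list[str]:
    """Flag saved queries that reference a dropped column (naive substring match)."""
    dropped = [c.rsplit(".", 1)[1] for c in changes if c.startswith("dropped column")]
    return sorted(name for name, sql in templates.items()
                  if any(col in sql for col in dropped))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER, mrr REAL, segment TEXT)")
before = snapshot(conn)

# Upstream change: someone rebuilds the table with a renamed column.
conn.executescript("""
    DROP TABLE accounts;
    CREATE TABLE accounts (id INTEGER, monthly_revenue REAL, segment TEXT);
""")
changes = diff_schemas(before, snapshot(conn))

templates = {
    "mrr_by_segment": "SELECT segment, SUM(mrr) FROM accounts GROUP BY segment",
    "account_count": "SELECT COUNT(*) FROM accounts",
}
print(changes)  # ['added column accounts.monthly_revenue', 'dropped column accounts.mrr']
print(templates_at_risk(changes, templates))  # ['mrr_by_segment']
```

A proactive agent would run this check on a schedule and propose an updated template (here, mapping `mrr` to `monthly_revenue`) for review before any user hits the broken query.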


Last thoughts

AI data agents are moving traditional data analysis from pre-defined dashboards designed for visualization to conversational and flexible interfaces that enable non-technical users to perform exploratory data analysis.

What makes an agent proactive is its ability to anticipate user needs and system changes, act in advance of explicit requests, and continuously optimize workflows. Proactive agents monitor context, detect risks before they become problems, surface opportunities, initiate helpful actions, and keep analysis accurate and relevant. For data analysis, this means agents not only automate manual work but also drive efficiency and insight by staying one step ahead.

However, these capabilities are only as valuable as the infrastructure that supports them. Rich metadata, sandboxed runtimes, and least-privilege access controls provide the safety, auditability, and enterprise readiness agents need to operate at scale and be trusted. Looking ahead, agents will become more composable and interoperable, multi-agent teams will coordinate specialized skills, and open standards will make it easier to combine tools without sacrificing governance.